Artificial intelligence (AI) has quickly become a pervasive part of modern daily life, from AI learning tools like ChatGPT and Grammarly to embedded AI features in Google and Snapchat. As AI becomes increasingly widespread, we need to discuss the implications of using such tools. Americans and the media tend to fear that AI will gain sentience or power over people's livelihoods and thoughts, but that concern should shift toward how AI may be used to spread potentially fascist political agendas through social media platforms. Unregulated AI algorithms could spread misinformation and incite extremism that serves a single political identity.

To understand why these concerns are pressing for professors and AI experts around the world, it helps to understand how AI functions and how its mechanisms, without proper oversight, could lead to the spread of fascist ideologies.

According to the National Artificial Intelligence Initiative Act of 2020, AI is defined as a “machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations or decisions influencing real or virtual environments.” Stripped down to its basic parts, AI is merely one branch of the broader family of computational systems and cannot directly influence the sociopolitical space by itself. However, in the hands of political parties that want to use it to influence the public sphere, it can become both powerful and destructive.

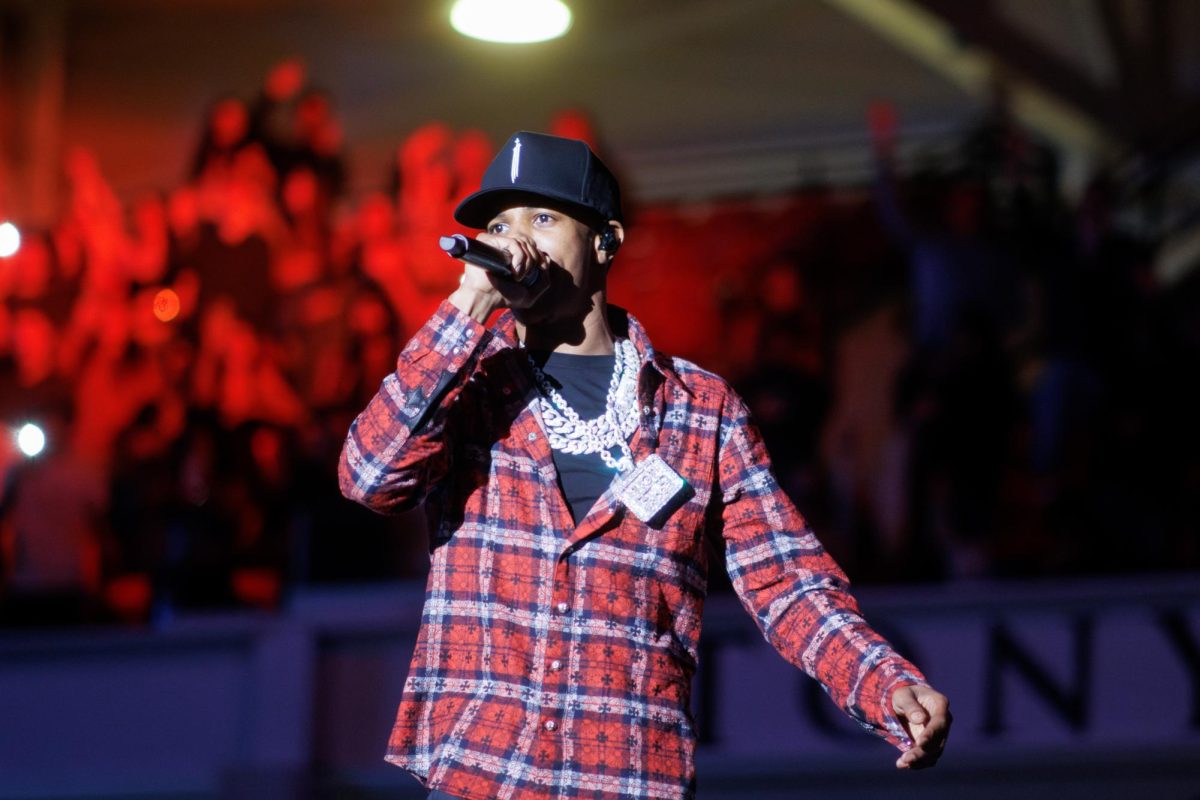

“AI has arrived at a level where people find it useful in ways that were previously unknown,” Andrew Schwartz, professor in the Department of Computer Science, said. “It has become general enough to where people are finding new applications for it that developers couldn’t anticipate.”

But how does this versatility lead to the fear that AI could be used to encourage fascist ideologies?

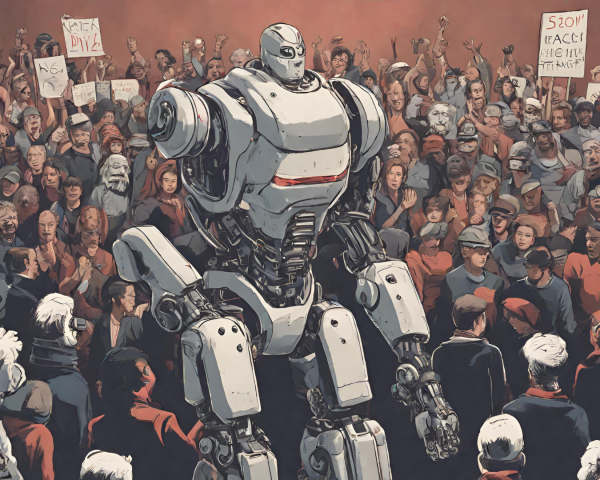

The rise of fascist parties has been a decades-long phenomenon. Propagators of these movements have used every mechanism at their disposal to bring about the sociopolitical changes they desire. During the “Business Plot” of 1933, wealthy right-wing financiers allegedly planned to use their money, weapons and connections to lead a force of veterans in an attempt to overthrow then-President Franklin D. Roosevelt.

AI systems are built on the premise that they can make predictions and recognize patterns to influence a user’s choices online. The problem is that these predictions lack nuance and any understanding of ethical boundaries beyond the creator’s original intentions. Because AI is already used in security and online applications, authoritarian parties could use it to single out marginalized people in an effort to criminalize or misrepresent them and advance their agendas.

The underlying weaknesses are already visible. Dan McQuillan, author of “Resisting AI: An Anti-fascist Approach to Artificial Intelligence,” writes, “If a facial recognition algorithm is primarily trained on a dataset of white faces, for example, it will struggle when asked to recognize Black faces.”

If an AI system is not trained on representative data, it can unfairly flag or misidentify the people its algorithms process, and malicious actors could exploit this on a wide scale to further ostracize minorities or anyone opposed to the ideology they are pushing.

There is also concern about bias toward certain languages in AI training data and large language models.

“AI is more biased towards English, but this is helped with larger language models,” Schwartz said. “There is still a gap between Western languages and poorer regions of the world.”

This gap keeps AI from reaching the level of cultural awareness needed to reflect cultures outside the West. That becomes a problem because AI-driven algorithms sometimes create political echo chambers based on a user’s past data, and a preference for Western languages brings Western cultural and societal biases with it.

An echo chamber forms when people are exposed to information that corresponds to their “interests, opinions, and beliefs while avoiding information that does not sympathize with or challenge their position.” Those who lack news literacy skills may be fed polarizing content that misrepresents other countries and cultures, which could lead to a skewed view of the world and to assumptions about things people only think they understand.

The question then becomes: should there be a push to include more of these languages in AI for the sake of inclusion, even if the people who speak them do not want that representation?

This is still debated. Including these languages could make for a more diverse and accurate dataset, but the data may not be gathered ethically, since people in certain countries do not use, or do not want to use, this type of technology.

With this in mind, if AI is an inevitable part of the technological future, there must be strict global guidelines and systems put into place to prevent AI tools from being used for authoritarian means.

There are several routes to implementing such a system. One is to keep AI from being a viable solution to problems fascist leaders may face, such as finding an audience willing to accept misleading data that lends credence to their rhetoric.

Technology companies could do this by finding ways to keep their users out of political echo chambers, which can foster radicalized and misinformed views. This could include ensuring that when AI models are asked about political topics, they provide only factual and accurate information so that their answers do not spread more misinformation. Third-party audits could then verify that technology companies meet these standards, providing a less biased and more structured way to enforce them.

Another route would be to use government action to set bounds within which technology companies must operate. This would be the most robust and straightforward way to prevent the misuse of AI, because it would give companies an incentive to follow ethical practices and impose meaningful consequences when their operations fall short.

“Solutions must be on the policy level so that tech companies have incentives,” Schwartz said.

Policymakers should begin examining which guidelines are needed to ensure that all tech companies follow the same code of ethics. Still, Schwartz believes that most people working on this technology are headed in the right direction.

AI can be used in ways that benefit humanity rather than degrade people’s day-to-day experience online, and many of those uses have likely not even been imagined yet. As this technology progresses, ensuring that these tools are used for appropriate purposes should be a priority so they cannot be weaponized to spread misinformation or harmful ideas.