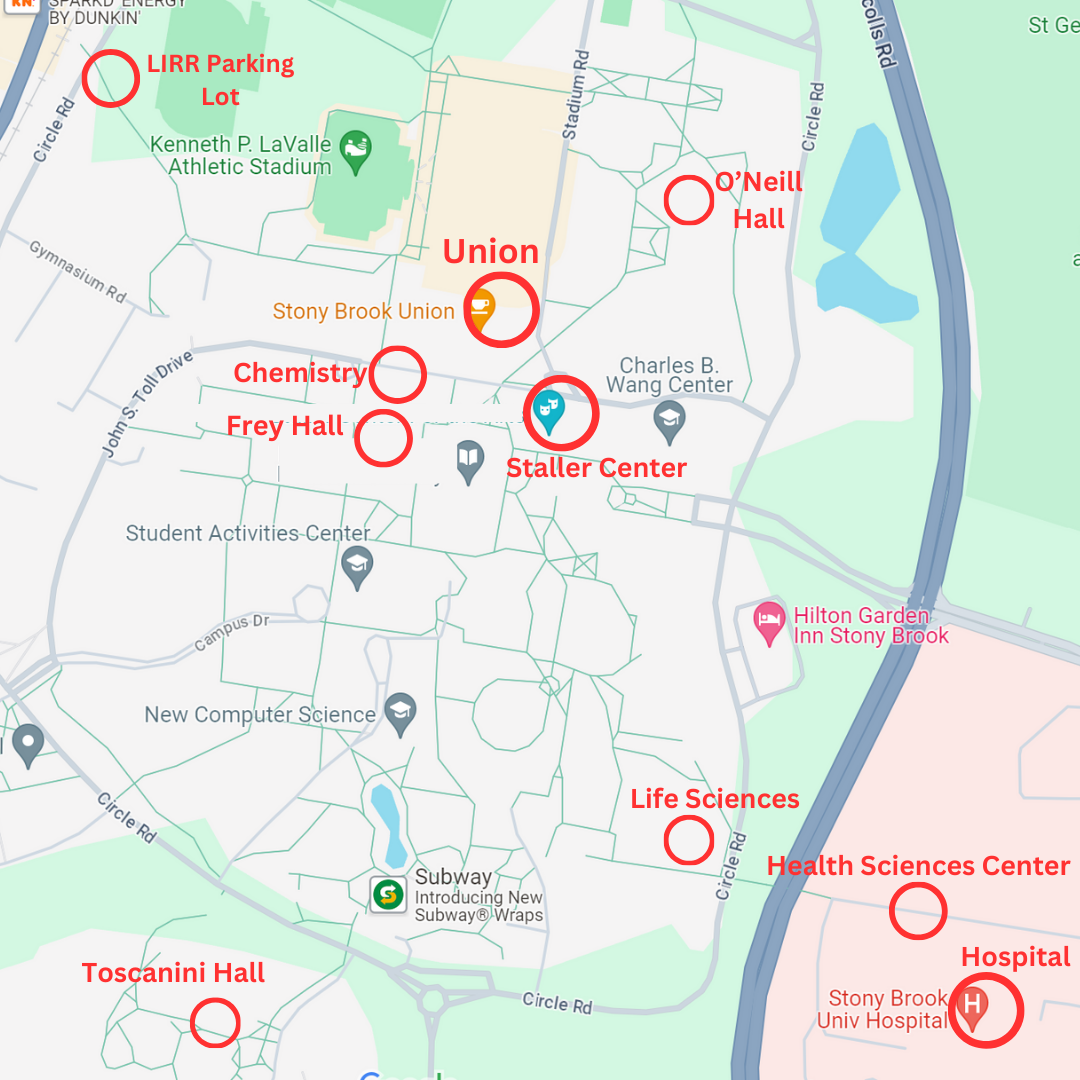

On Thursday, Feb. 2, Stony Brook University’s Academic Judiciary and Center for Excellence in Learning and Teaching held an online discussion panel, Trends and Tips: Assessment in the Classroom, to discuss the use of artificial intelligence and its implications for academic integrity.

“Academic integrity is not just the responsibility of the student. It’s the responsibility of the faculty and the responsibility of the administration,” Academic Integrity Officer Wanda Moore said.

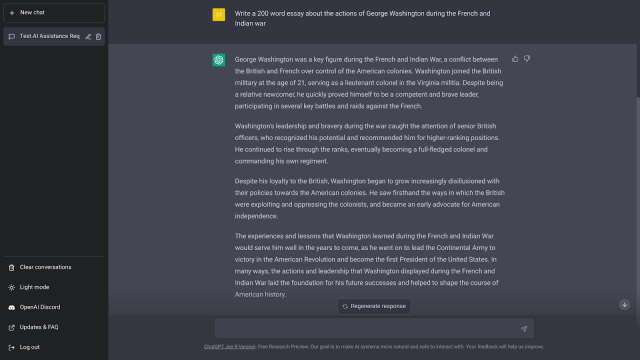

The panel’s discussion focused on a new artificial intelligence (AI) program called ChatGPT, which can generate large paragraphs of text in response to specific prompts. Over 400 people attended to learn about its capabilities, dangers and applications.

ChatGPT, which stands for Chat Generative Pre-trained Transformer, is a free chatbot developed by OpenAI and released on Nov. 30, 2022. It was adapted from its predecessor, GPT-3, OpenAI’s third version of a large language model.

A large language model is a computer program trained to interpret texts from users and then deliver appropriate responses. These models operate using a neural network – an algorithm that trains computers to recognize patterns using mathematical functions.

GPT-3 was trained to mimic human writing using an immense amount of data from across the Internet, resulting in ChatGPT becoming a highly realistic chatbot. It can give suggestions, answer general questions and draw basic conclusions, but it struggles to produce accurate statistics, identify real sources or cite legitimate quotations. It also maintains neutrality whenever it is asked for choices and opinions and has a limit of about 350 words per message.

However, this is by no means the end of GPT’s potential. The fourth generation of GPT, called GPT-4, is already in development. Most work being done is to improve its accuracy and response time. Though its release date is uncertain, it could be as soon as the first quarter of 2023.

All of this and more was presented during the panel discussion. Some professors showed potential applications of ChatGPT, using it to emulate famous writers and brainstorm essay ideas. Others discussed how ChatGPT and academic integrity can coexist.

ChatGPT generates unique responses, so students could potentially use it to submit work without being flagged for plagiarism. However, using the application is still considered academic dishonesty because it is not the student’s original work.

The panel did not issue a definitive statement on the integrity of ChatGPT. It did give teachers information and guidance on how they should model their own policies towards this new tool. Professors were also told to craft assignments that require original thought from students, which AI cannot replicate.

Many professors have already issued statements on the use of AI tools in their syllabi. Some believe AI-generated work is not a student’s own work, while others view it as a tool that should be used but not abused.

Allegra de Laurentiis, a professor of philosophy, is against students using AI tools in her class.

“I am very concerned because in the humanities, it is essential to know that the products of a student’s research are their own products,” de Laurentiis said. “If we cannot be sure of that, everything changes.”

In her classes, using AI is treated as plagiarism, and she “would do as she always did in the past and inform the Academic Judiciary.”

Reuben Kline, an associate professor of political science, thinks that education should adapt to AI rather than ban it.

“We need to learn ways where we can have students still generate interesting text and be able to evaluate their conceptual and cognitive contributions to the textual output,” Kline said. “I really see that as the only way forward.”

In Kline’s classes, students are allowed to use ChatGPT, but he would like to be informed beforehand.

Owen Rambow, a professor of linguistics and advanced computer science, has mixed opinions.

“If the goal of the course is to specifically learn how to write, I think it would clearly be cheating,” Rambow said. “If it’s a history course and the goal is to show you can think analytically and read documents and synthesize them, then maybe this model where someone just jots down ideas and GPT-3 formulates them would be adequate.”

Stony Brook students also have varying opinions on ChatGPT.

Raymond Chen, a freshman mechanical engineering major, says it’s “a helpful tool,” although he is not sure what an acceptable usage would be. His WRT 102 teacher has told his class AI is not allowed, but Chen thinks it would be impossible to detect and enforce.

Cheily Valentin, a sophomore biological engineering major, thinks “it will make it easier to cheat, but it could also be used in a good way to learn more about specific topics.” Her teachers have not addressed ChatGPT, but she expects it to be banned by the university.

Stony Brook is not the only institution that is trying to solve the problems ChatGPT poses. In January, New York City’s education department banned ChatGPT from all its schools and removed it from its devices and networks. Numerous other school districts across the country, from Montgomery, Ala., to Seattle, Wash., have also put restrictions on ChatGPT.

But because ChatGPT is extremely realistic and adaptable, it is very difficult to detect when it is being used.

“The problem is that if we develop software that allows detection, another person could train a model to bypass that detection, resulting in a cat and mouse scenario,” Rambow said.

Additionally, AI is already being used in professional environments. Writers from the Washington Post tried using it to reply to company emails. Forbes is warning people with writing-centered jobs about being replaced by AI. A company called DoNotPay is even attempting to create an AI lawyer. Stony Brook is committed to preparing its students for the future, and it recognizes that ChatGPT and tools like it are a part of that future.

“Eventually it will be a common tool, possibly very soon, that’s used to assist in writing basically everything,” Kline said. “The people who don’t use it will be disadvantaged. We teach students how to succeed in future workplaces. Future workplaces will certainly involve using tools like ChatGPT.”